The core of our operation is the cutting-edge computing services we provide. These put our users at the forefront of scientific research and enable them to tackle challenges such as big data and physics simulations. Our team of specialists provides expert support, in English and French, for researchers who use our services. To reach out with any question, simply email us at support@scinet.utoronto.ca.

Want to know more? Click on one of the links below to navigate to its section.

Advanced computing systems

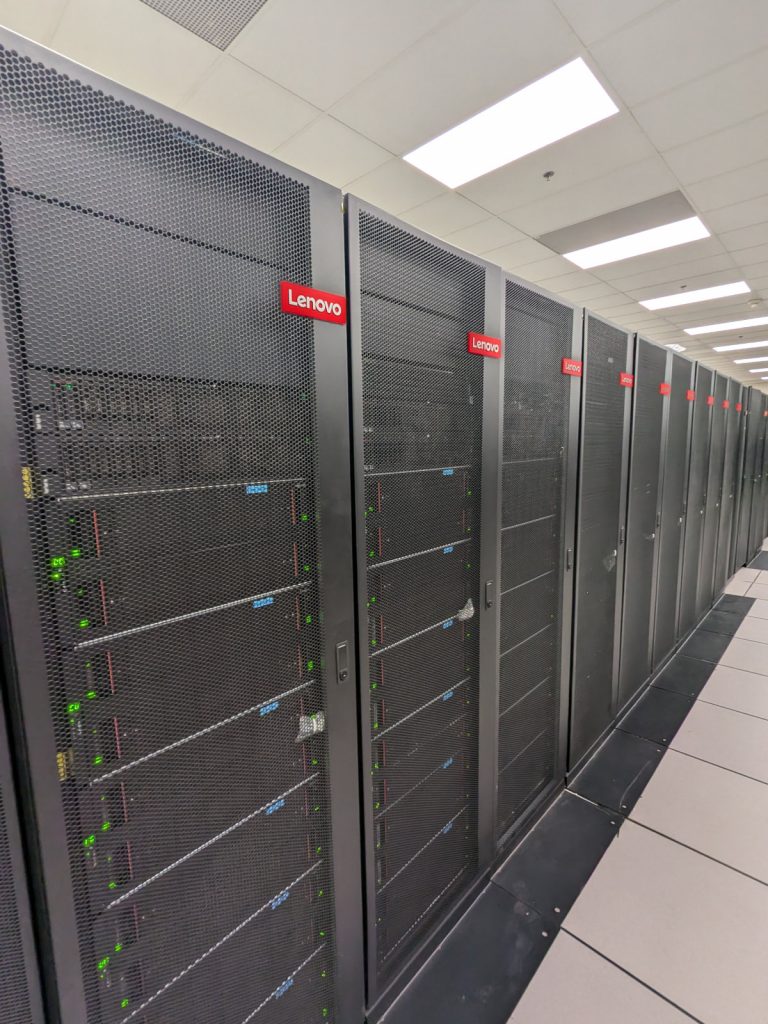

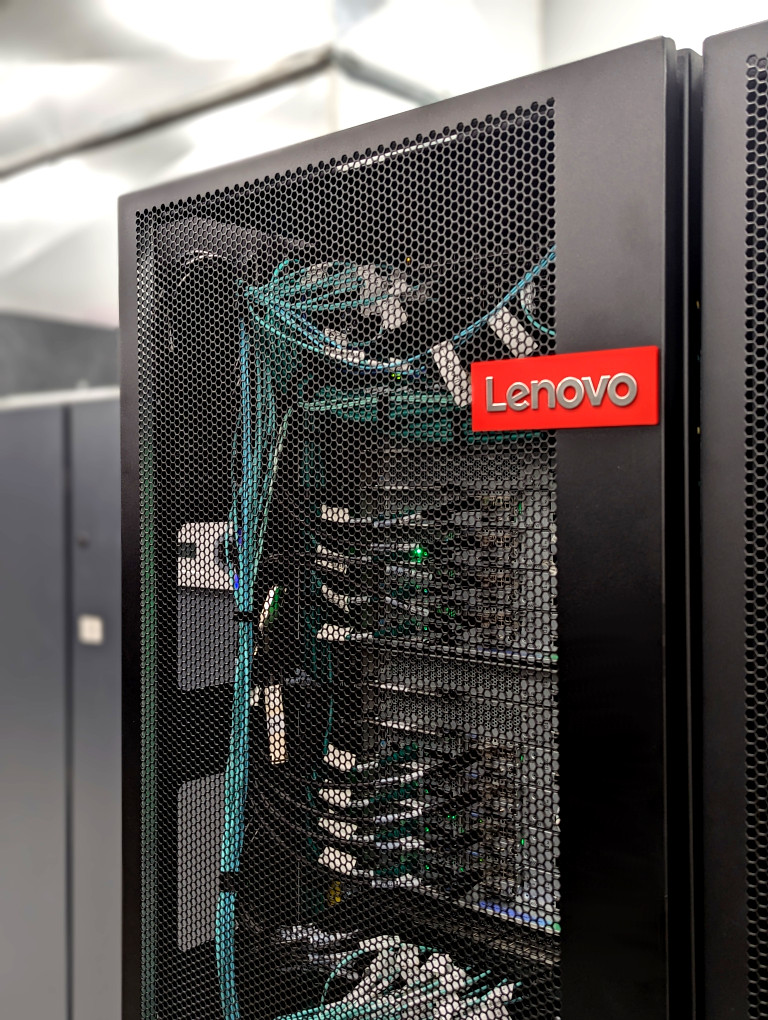

SciNet is Canada’s largest supercomputing centre, and we run and make available a range of computing resources for Canadian researchers and innovators.

More technical information and the system status can be found on the SciNet documentation wiki.

Niagara

Niagara is our 80,000-core supercomputer and has been our main workhorse since 2018. This resource is available to all members of the Digital Research Alliance of Canada.

Mist

Mist has been our main GPU cluster since 2020 and features 54 IBM POWER9 nodes with a total of 216 Nvidia Tesla V100 GPUs. This resource is available to all members of the Digital Research Alliance of Canada.

Rouge

Rouge is a smaller GPU cluster donated by AMD in 2021. It features 20 nodes with a total of 160 AMD Radeon MI50 GPUs. This resource is available to all members of the University of Toronto.
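As a quick illustration of what the node and GPU totals above imply, the short sketch below divides each cluster's GPU count by its node count. It assumes the GPUs are spread evenly across the nodes, which this page does not state explicitly; the variable and function names are illustrative only.

```python
# Node and GPU totals as quoted on this page.
clusters = {
    "Mist": {"nodes": 54, "gpus": 216},
    "Rouge": {"nodes": 20, "gpus": 160},
}


def gpus_per_node(nodes: int, gpus: int) -> int:
    """Return GPUs per node, assuming an even distribution (an assumption)."""
    per_node, remainder = divmod(gpus, nodes)
    if remainder:
        raise ValueError("GPU count is not an exact multiple of the node count")
    return per_node


for name, spec in clusters.items():
    print(f"{name}: {gpus_per_node(spec['nodes'], spec['gpus'])} GPUs per node")
```

Under that assumption, the totals work out to 4 GPUs per node on Mist and 8 GPUs per node on Rouge.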

Balam

Since 2023, we have operated the Balam GPU cluster on behalf of the Acceleration Consortium at the University of Toronto. It features 41 Nvidia Tesla A100 GPUs.

We also operate the Teach cluster as part of our Training & Education program.

★ Over the years, SciNet operated several advanced computing systems that have since been decommissioned. Read about them here.

High Performance Storage System

The High Performance Storage System (HPSS) is a tape-backed hierarchical storage system that provides a significant portion of the allocated storage space at SciNet. It is a repository for archiving data that is not being actively used. Data can be returned to the active filesystem on the compute clusters when it is needed. The storage capacity of this system is currently 90 PB.

For more information, see the technical documentation on the SciNet wiki.

★ HPSS, as well as SciNet's other file systems, can be accessed through Globus.

S4H secure enclave

S4H (originally SciNet4Health) is our solution for advanced computing with sensitive data (such as private health information or proprietary technology). The environment offers a number of enhanced security features beyond what is generally found in academic or commercial data centres, and is compliant with level 2 of CMMC 2.0 (contingent upon audit).

This service is available to all members of the University of Toronto.

Read more here.

Commercial usage

Our high-performance computing resources are offered free of charge to researchers in Canadian institutions. For former academics and their collaborators who want to continue using our infrastructure, we offer commercial usage at a competitive fee. Commercial users enjoy 40 dedicated Neptune nodes and receive the same level of dedicated support from our team as academic users.

JupyterHub

Using our JupyterHub is a great way for users to access and explore their data stored on the SciNet file systems, using a web browser. For that purpose we have one dedicated large-memory node. To access this resource, simply open https://jupyter.scinet.utoronto.ca in your favourite browser.

Read more on our Wiki.

Databases

SciNet collaborates with the Map and Data Library (MDL) at the University of Toronto to host various databases on a dedicated PostgreSQL server node. The currently offered databases are Web of Science (by Clarivate) and the Canadian Intellectual Property Office (CIPO) database. Researchers can connect to these databases from our computing systems, such as Niagara, or from JupyterHub.
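As a rough sketch of what connecting to a PostgreSQL server involves, the snippet below assembles a standard libpq-style connection URI using only the Python standard library. The user name, host name, and database name are hypothetical placeholders, not SciNet's actual values; the real connection details and access conditions are in the SciNet documentation.

```python
from urllib.parse import quote


def build_postgres_uri(user: str, host: str, database: str, port: int = 5432) -> str:
    """Build a libpq-style URI: postgresql://user@host:port/database.

    No password is embedded; a client would prompt for it or read it
    from a separate credentials file.
    """
    return f"postgresql://{quote(user, safe='')}@{host}:{port}/{quote(database, safe='')}"


# All three values below are hypothetical placeholders for illustration.
uri = build_postgres_uri("alice", "db.example.org", "wos")
print(uri)
```

A URI of this form can be passed to most PostgreSQL clients, whether they run on a compute system or in a notebook session.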

The Web of Science database is a proprietary collection of the metadata from more than 90 million publications across 20,000 journals, plus books and conference proceedings (as of 2024). Access to the data is restricted to members of the University of Toronto, and further conditions apply. Read more here.